Accelerating the AI Revolution: Thoughts from NVIDIA GTC 2025

Posted by Frank Yang on Mar 24, 2025

I'm excited to be attending NVIDIA GTC for the second time! This year, I came in with three burning questions I'm eager to explore during the conference:

- Where is AI heading?

- What impact will AI’s evolution have on industries?

- What market opportunities will emerge along the way?

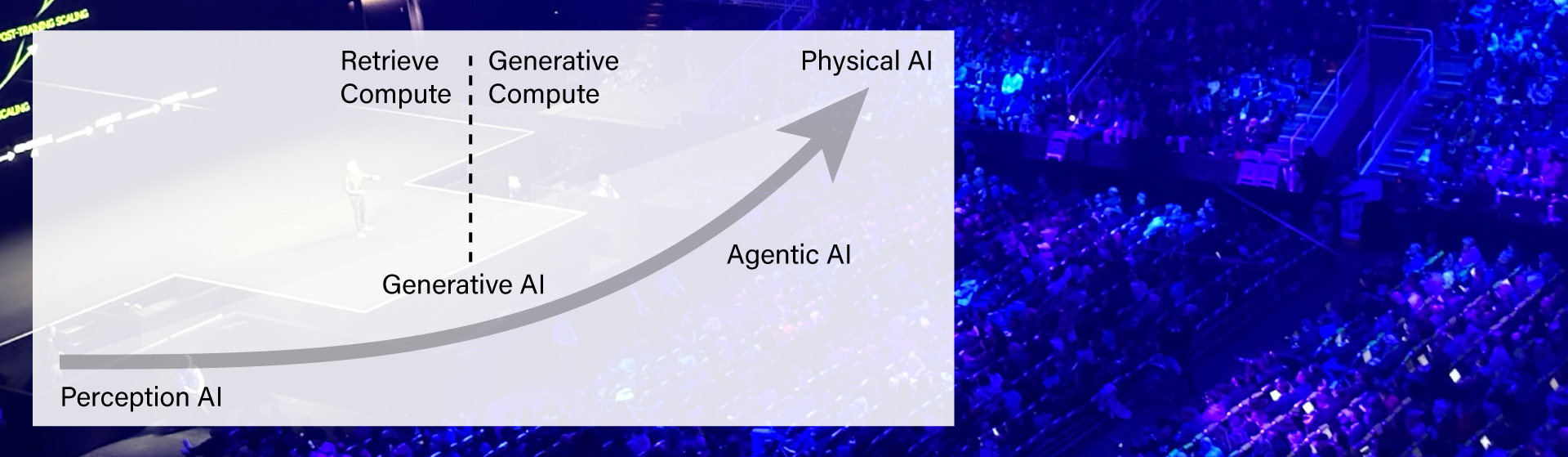

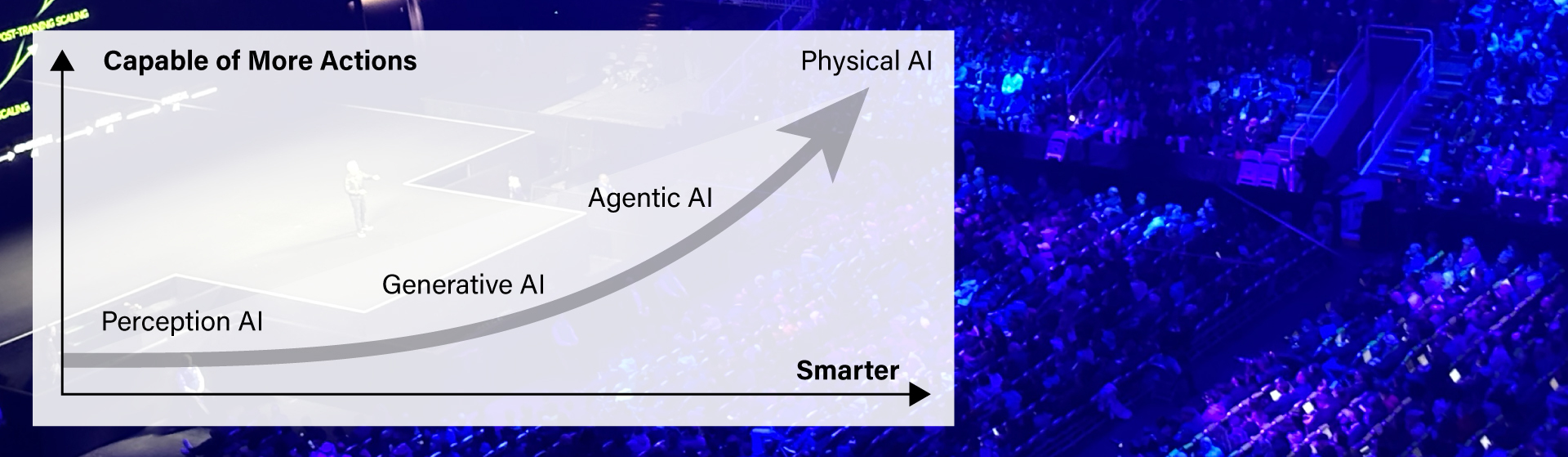

NVIDIA’s Founder and CEO, Jensen Huang, laid out a clear roadmap for AI’s future in his keynote, breaking AI’s evolution into four key phases: Perception AI, Generative AI, Agentic AI, and Physical AI. As Mr. Huang put it, “each phase opens up new market opportunities.” These phases are also reshaping the infrastructure and technology landscape, particularly in compute, networking, and connectivity.

First, let’s discuss the four phases of AI:

- Perception AI empowers machines to interpret and understand the world through sensory data. This includes computer vision, enabling AI to analyze and recognize images and videos, and speech recognition, which allows it to process and understand verbal communication.

- Generative AI can understand context, process user inputs, and generate new content based on its learned knowledge. It can create text, images, code, music, and more by synthesizing patterns from vast datasets, making it capable of producing human-like responses and creative outputs.

- Agentic AI goes beyond perception and generation by reasoning, planning, and taking actions autonomously. It can understand complex tasks, strategize solutions, and execute actions using tools, sub-agents, or other AI models. This enables AI to function as an autonomous problem solver rather than just a passive responder.

- Physical AI serves as the foundation for robotics, enabling machines to interact with the physical world. It understands real-world physics concepts like friction, inertia, and three-dimensional space, allowing robots to navigate environments, manipulate objects, and perform tasks that require real-world adaptability.

With each phase, AI becomes more capable of handling complex tasks: it analyzes data more efficiently, makes better-informed decisions, and continuously learns from experience. As its ability to understand, reason, and act grows, it unlocks new possibilities across a widening range of domains.

As AI capabilities advance at an unprecedented pace, the real challenge, and the real opportunity, lies in building the compute, storage, and networking infrastructure needed to sustain this transformation. That infrastructure must not only scale up to handle growing workloads but also scale out, with the flexibility and upgradability to keep pace with evolving AI demands.

AI workloads require not just more computing power but also an efficient way to distribute it. This involves:

- Scaling Up: Leveraging higher-performance GPUs with larger and faster memory to handle increasingly complex models.

- Scaling Out: Distributing compute resources across racks and PODs, necessitating high-bandwidth, low-latency, and non-blocking interconnects to ensure seamless parallel processing.
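To make the scale-out point concrete, here is a minimal sketch of the textbook bandwidth-only cost model for a ring all-reduce, the collective that synchronizes gradients across GPUs in data-parallel training. It assumes a single ring with every GPU on identical links and ignores per-step latency and compute overlap, so treat the result as a lower bound rather than a prediction. The function name and the 10 GB gradient size are illustrative assumptions, not figures from the keynote.

```python
def ring_allreduce_seconds(num_gpus: int, message_bytes: float, link_gbps: float) -> float:
    """Lower-bound time for a bandwidth-bound ring all-reduce (hypothetical helper).

    A ring all-reduce runs N - 1 reduce-scatter steps and N - 1 all-gather
    steps, each sending S / N bytes per GPU, for 2 * (N - 1) / N * S bytes
    in total. Per-step latency is ignored.
    """
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    bytes_sent_per_gpu = 2 * (num_gpus - 1) / num_gpus * message_bytes
    link_bytes_per_second = link_gbps * 1e9 / 8  # convert Gb/s to bytes/s
    return bytes_sent_per_gpu / link_bytes_per_second


if __name__ == "__main__":
    gradient_bytes = 10e9  # assumed 10 GB of gradients per step (illustrative)
    for gbps in (400, 800, 1600):
        t = ring_allreduce_seconds(8, gradient_bytes, gbps)
        print(f"{gbps}G links: {t:.3f} s per all-reduce")
```

Because the 2(N - 1)/N factor approaches 2 as N grows, adding GPUs barely reduces the bytes each GPU must move per step; in this model the main lever is link bandwidth, which is one way to read the push from 400G to 800G and 1.6T. Real collective libraries can choose among ring, tree, and other algorithms, so actual timings will differ.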

Evolving Networking Needs for AI Data Centers

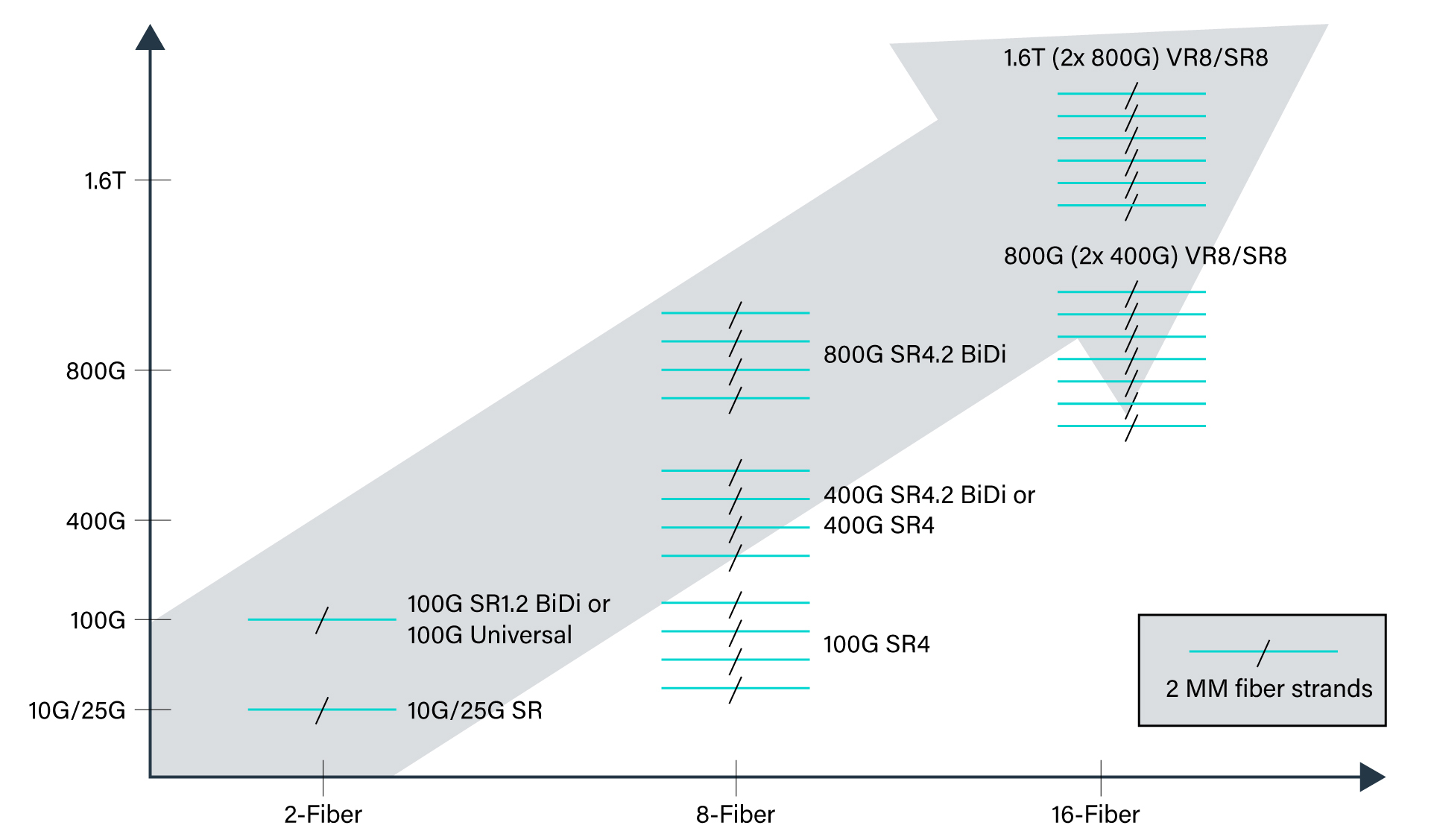

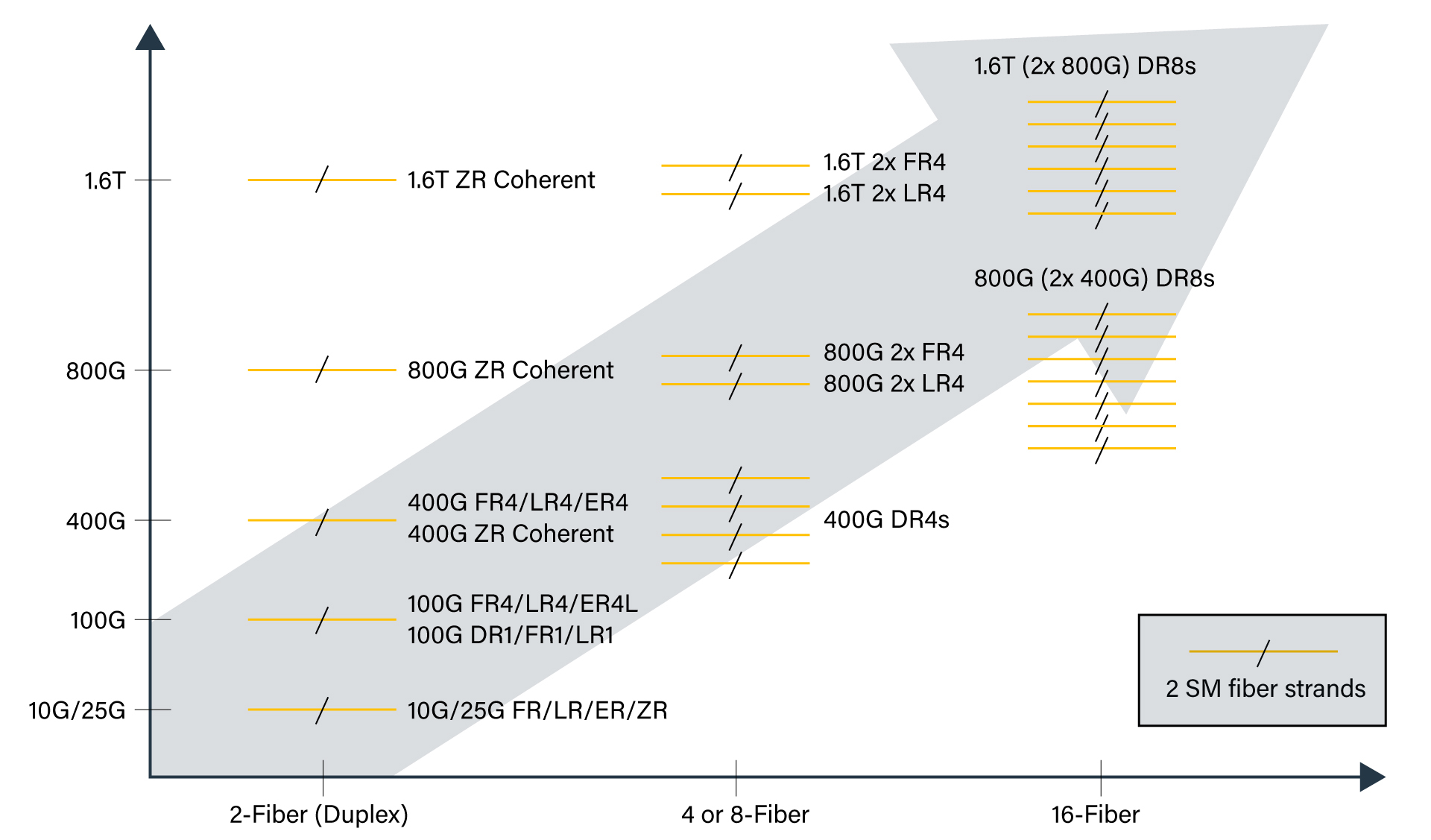

As AI workloads scale, the demand for 400G, 800G, and 1.6T optics is rapidly increasing. To support massive AI models and distributed computing, AI data centers require:

- Non-blocking architectures to efficiently move massive AI training datasets.

- Ultra-low latency for real-time, all-to-all collective operations.

- Scalable, high-efficiency interconnect solutions to handle exponential data growth.
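One common way to check the non-blocking requirement is the oversubscription ratio of each leaf (top-of-rack) switch: total host-facing bandwidth divided by total spine-facing bandwidth. A ratio of 1:1 or lower means the uplinks can carry all downlink traffic at line rate, which is how "non-blocking" is usually meant for data-center Clos fabrics. The sketch below is a hypothetical helper, and the port counts in the example are assumptions chosen for illustration, not a specific NVIDIA design.

```python
from dataclasses import dataclass


@dataclass
class LeafSwitch:
    """Hypothetical leaf switch described by its port counts and speeds."""
    downlink_ports: int  # ports facing GPU servers
    downlink_gbps: int   # speed of each downlink port, in Gb/s
    uplink_ports: int    # ports facing the spine layer
    uplink_gbps: int     # speed of each uplink port, in Gb/s

    def oversubscription(self) -> float:
        """Host-facing bandwidth divided by spine-facing bandwidth."""
        downlink_total = self.downlink_ports * self.downlink_gbps
        uplink_total = self.uplink_ports * self.uplink_gbps
        return downlink_total / uplink_total

    def is_non_blocking(self) -> bool:
        # 1:1 or better: the uplinks can absorb every downlink at full rate.
        return self.oversubscription() <= 1.0


if __name__ == "__main__":
    balanced = LeafSwitch(downlink_ports=32, downlink_gbps=400, uplink_ports=16, uplink_gbps=800)
    tapered = LeafSwitch(downlink_ports=32, downlink_gbps=400, uplink_ports=8, uplink_gbps=800)
    for name, leaf in (("balanced", balanced), ("tapered", tapered)):
        print(f"{name}: {leaf.oversubscription():.1f}:1, non-blocking={leaf.is_non_blocking()}")
```

Running it prints `balanced: 1.0:1, non-blocking=True` and `tapered: 2.0:1, non-blocking=False`. Note that this checks a single tier only; a fabric is fully non-blocking only if every tier, including the spine, holds a 1:1 ratio.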

Additionally, AI data centers are shifting towards 4x multi-fiber connectivity, enabling faster and more efficient data movement across GPUs, TPUs, and other accelerators. Traditional Ethernet-based networking architectures are increasingly insufficient, driving the adoption of AI-optimized fabrics with high-bandwidth, low-latency interconnects. These advanced networking solutions, powered by high-speed optical transceivers and fiber interconnects, are now mission-critical for sustaining AI’s relentless data demands.

Market Impact and Opportunities

The shift in AI infrastructure is creating significant market opportunities:

- Optical Transceivers and High-Speed Interconnects: As 800G and 1.6T optics become the backbone of AI networking, demand for next-generation optical transceivers will surge. Companies investing in power-efficient, low-latency, AI-optimized transceiver solutions are poised to lead the market.

- Next-Gen Compute Architectures: The rapid evolution of AI is driving the need for new compute architectures, from advanced GPUs and AI accelerators to domain-specific processors. Businesses developing custom silicon tailored for AI workloads will gain a substantial competitive edge.

- Scalable, AI-Optimized Networking: Traditional data center networks must evolve into scale-out, AI-driven fabrics. Innovations such as pluggable optical transceivers, co-packaged optics (CPO), and network-on-chip (NoC) architectures will provide diverse, high-performance networking options.

AI is not only advancing; it is demanding a fundamental rethinking of infrastructure. As AI models become more sophisticated and workloads grow more complex, the companies that provide flexible, scalable, high-performance compute and networking solutions will shape the future of this revolution. The AI race is on, and the infrastructure innovators will define the next frontier.