Speed Up High-Performance Computing with 200G InfiniBand HDR

Posted by Frank Yang on Mar 2, 2023

The fastest supercomputers today run at about one exaFLOPS. And, yes, that’s as fast as it sounds.

A “flop” is an addition or multiplication of 64-bit floating-point numbers, and one exaFLOPS (exa floating-point operations per second) is a billion billion (10^18), or one quintillion, floating-point operations per second.

The speed of a supercomputer is an aggregate of the contributions of many central processing units, graphics processing units and/or data processing units connected through networks. In a previous article about InfiniBand (IB), we saw that it is well suited to building networks for supercomputing, or HPC. InfiniBand technology has advanced to 200G HDR and 400G NDR.
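To make the aggregation concrete, here is a minimal Python sketch that sums peak double-precision rates across devices. The node count and the per-GPU and per-CPU rates are hypothetical round numbers chosen for illustration, not the specifications of any real system, and peak figures overstate what applications actually sustain.

```python
EXA = 10**18  # 1 exaFLOPS = 10^18 floating-point operations per second


def aggregate_peak_flops(devices):
    """Sum peak FP64 rates over (device_count, flops_per_device) pairs."""
    return sum(count * rate for count, rate in devices)


# Hypothetical system: 9,000 nodes, each with 4 GPUs at 50 TFLOPS
# and 1 CPU at 2 TFLOPS (illustrative numbers, not a real machine).
nodes = 9_000
system = [
    (nodes * 4, 50 * 10**12),  # GPUs
    (nodes * 1, 2 * 10**12),   # CPUs
]

total = aggregate_peak_flops(system)
print(f"{total:.3e} FLOP/s = {total / EXA:.3f} exaFLOPS")  # 1.818e+18 FLOP/s = 1.818 exaFLOPS
```

With these assumed numbers the machine reaches about 1.8 exaFLOPS of peak. How much of that peak a real workload sustains depends heavily on how quickly the devices can exchange data, which is where the interconnect comes in.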

What Is InfiniBand?

InfiniBand is a computer networking communications standard used in HPC that features very high throughput and very low latency. Designed for data interconnect both among and within computers, InfiniBand can serve as either a direct or a switched interconnect between servers and storage systems, and it scales by using a switched fabric network topology. It helps to make fast faster.

Applications and Use of InfiniBand HDR

InfiniBand supports both in-chassis backplane applications and external copper and optical fiber connections, and it transfers data over switched, point-to-point channels.

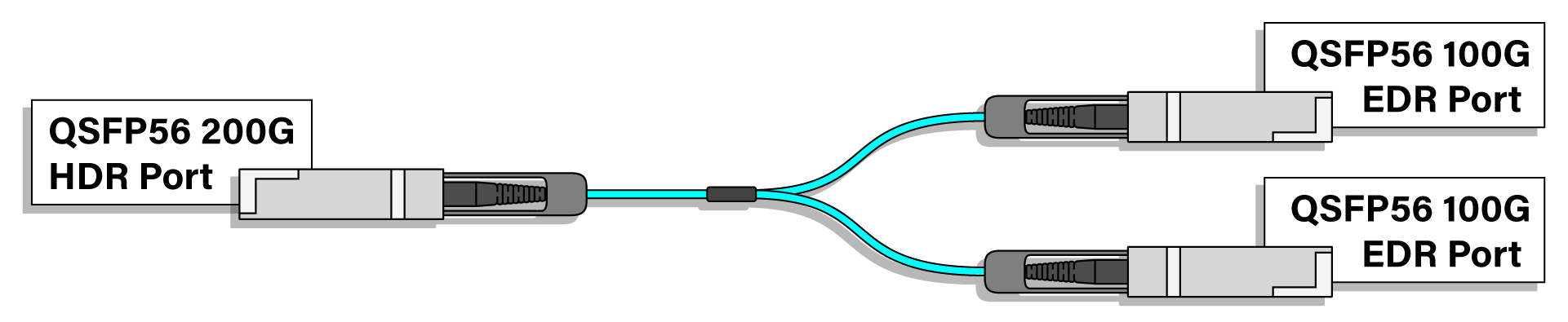

InfiniBand HDR connectivity is available as QSFP56 200G to 2x 100G QSFP56 active optical breakout cables (AOC breakouts) and as QSFP56 to QSFP56 200G InfiniBand HDR Active Optical Cables (AOCs). With either cable, InfiniBand requires only minimal processing overhead and is ideal for carrying multiple traffic types (clustering, communications, storage, management) over a single connection.

Be aware that the 100G end of an AOC breakout requires a QSFP56 100G port (not a QSFP28 port). The 200G to 2x 100G AOC breakouts let customers build 100G server access networks with good port density, and customers will be able to migrate to 4x 100G once their switches support it.
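The port-density benefit is easy to quantify. The sketch below assumes a hypothetical 40-port 200G HDR leaf switch in which every port not used as an uplink carries a 200G to 2x 100G breakout; the port counts and the helper name `breakout_plan` are illustrative assumptions, and the model ignores cable reach and the QSFP56 port requirement.

```python
def breakout_plan(switch_ports, uplink_ports, port_gbps=200, split=2):
    """Return (server_links, gbps_per_server_link, oversubscription) for one leaf switch.

    Assumes every port not used as an uplink carries a 1-to-`split` breakout cable
    and that uplinks run at the full port rate.
    """
    if not 0 < uplink_ports < switch_ports:
        raise ValueError("uplink_ports must be between 1 and switch_ports - 1")
    downlink_ports = switch_ports - uplink_ports
    server_links = downlink_ports * split
    gbps_per_link = port_gbps // split
    # Ratio of server-facing bandwidth to uplink bandwidth; 1.0 means non-blocking.
    oversubscription = (downlink_ports * port_gbps) / (uplink_ports * port_gbps)
    return server_links, gbps_per_link, oversubscription


# Hypothetical 40-port 200G HDR leaf switch.
print(breakout_plan(40, 20))  # (40, 100, 1.0)
print(breakout_plan(40, 10))  # (60, 100, 3.0)
```

With 20 uplinks, the 20 remaining ports serve 40 servers at 100G without oversubscription; without breakouts, the same 20 ports would serve only 20 servers. Dropping to 10 uplinks raises the count to 60 servers at a 3:1 oversubscription ratio.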

Benefits of IB to Improve Existing Systems

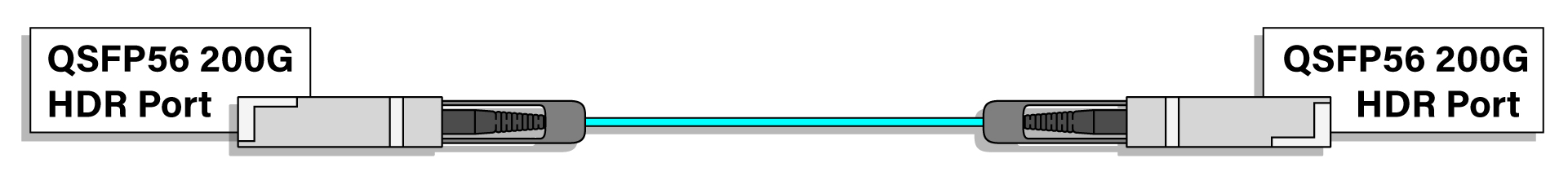

The QSFP56 200G InfiniBand HDR AOC can be used to interconnect switches or to connect switches to servers. Both types of IB HDR AOC, the straight 200G cable and the 200G to 2x 100G breakout, provide high bandwidth, cost effectiveness, flexibility in cable reach, low latency, and quick deployment.

AOC fiber cables also form small bundles, which is very beneficial for airflow cooling in the data center.

(Pictured: AOC breakouts and AOC.)

Other Advantages of InfiniBand HDR

InfiniBand HDR is used in most of the world’s commercially available supercomputers and accounts for 77% of new HPC systems. As a result, it sits at the center of an ecosystem that includes innovative and cost-effective cabling, open-source software distribution from the OpenFabrics Alliance, and long-haul solutions that reach outside the data center.

With measured end-to-end latency of about 1 µs, InfiniBand greatly accelerates many data center and high-performance computing (HPC) applications. It also provides direct support for Remote Direct Memory Access (RDMA) and other advanced reliable transport protocols that make workload processing more efficient.
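Why does a 1 µs latency matter? A common first-order way to reason about it is the latency-plus-bandwidth (Hockney, or alpha-beta) model: transfer time is roughly latency plus message size divided by link rate. The sketch below plugs in the 1 µs figure and an assumed 200 Gb/s HDR link; it deliberately ignores headers, encoding, congestion, and software overhead, so treat the numbers as illustrative.

```python
def transfer_time_us(message_bytes, latency_us=1.0, link_gbps=200.0):
    """First-order latency + size/bandwidth estimate, in microseconds.

    Ignores packet headers, line encoding, congestion, and software overhead.
    """
    bits_per_us = link_gbps * 1e3  # 1 Gb/s = 1,000 bits per microsecond
    return latency_us + message_bytes * 8 / bits_per_us


latency = 1.0
for size in (64, 4096, 1 << 20):  # 64 B, 4 KiB, 1 MiB
    t = transfer_time_us(size, latency_us=latency)
    print(f"{size:>8} B: {t:9.3f} us total, latency is {latency / t:.0%} of it")
```

Under these assumptions a 64-byte message spends almost all of its time in latency, while a 1 MiB transfer is dominated by serialization. Tightly coupled HPC codes exchange many small messages, which is why end-to-end latency matters as much as raw bandwidth.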

InfiniBand Host Channel Adapters (HCAs) and switches are very competitively priced, giving InfiniBand a compelling price/performance advantage over alternative technologies.

InfiniBand also offers fabric consolidation and low energy usage. Consolidating networking, clustering, and storage data over a single fabric significantly lowers the overall power, real estate, and management overhead required for servers and storage.

With reliable and stable connections, InfiniBand enables fully redundant and lossless I/O fabrics, with automatic path failover and link-layer multipathing to meet the highest levels of availability.

Since data integrity is critical, InfiniBand performs Cyclic Redundancy Checks (CRCs) at each fabric hop and end-to-end across the fabric to ensure the data is correctly transferred.
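The value of checking at both levels can be shown with a toy model. The sketch below uses Python's `zlib.crc32` for both checks and an invented packet layout (`Packet`, `link_crc`, `send`, `forward`, and `receive` are hypothetical names); it is not the InfiniBand wire format, which defines its own invariant and variant CRC fields, only an illustration of why a per-hop check and an end-to-end check complement each other.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Packet:
    header: bytes     # hypothetical routing fields a switch may rewrite at each hop
    payload: bytes    # data that must arrive unchanged
    e2e_crc: int      # computed once by the sender over the invariant payload
    hop_crc: int = 0  # recomputed over the whole packet on every link


def link_crc(pkt: Packet) -> int:
    """CRC over everything that crosses a single link (header and payload)."""
    return zlib.crc32(pkt.header + pkt.payload)


def send(header: bytes, payload: bytes) -> Packet:
    pkt = Packet(header, payload, e2e_crc=zlib.crc32(payload))
    pkt.hop_crc = link_crc(pkt)
    return pkt


def forward(pkt: Packet, new_header: bytes) -> Packet:
    """A switch verifies the incoming link CRC, rewrites the header, and recomputes it."""
    if link_crc(pkt) != pkt.hop_crc:
        raise ValueError("per-hop CRC mismatch")
    pkt.header = new_header
    pkt.hop_crc = link_crc(pkt)
    return pkt


def receive(pkt: Packet) -> bytes:
    """The destination checks the last link, then the end-to-end CRC."""
    if link_crc(pkt) != pkt.hop_crc:
        raise ValueError("per-hop CRC mismatch")
    if zlib.crc32(pkt.payload) != pkt.e2e_crc:
        raise ValueError("end-to-end CRC mismatch")
    return pkt.payload


# Clean path across two switches.
pkt = send(b"hop0", b"simulation results")
for hdr in (b"hop1", b"hop2"):
    pkt = forward(pkt, hdr)
print(receive(pkt))  # b'simulation results'

# A faulty switch corrupts the payload before recomputing its link CRC,
# so every later per-hop check passes; only the end-to-end CRC catches it.
bad = send(b"hop0", b"simulation results")
bad.payload = b"simulation resulTs"
bad.hop_crc = link_crc(bad)
try:
    receive(bad)
except ValueError as err:
    print(err)  # end-to-end CRC mismatch
```

A per-hop CRC catches bit errors on each link, but a switch that corrupts data internally will then compute a valid per-hop CRC over the bad data. Only the end-to-end CRC, computed once by the sender, catches that case.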

InfiniBand’s long-haul solutions extend its reach beyond the data center and across the globe.

Compliance testing conducted by the InfiniBand Trade Association (IBTA), combined with interoperability testing conducted by the OpenFabrics Alliance, results in a highly interoperable environment, which benefits end users in terms of product choice and vendor independence.

Supercomputing and InfiniBand networks are being used to support artificial intelligence, machine learning, and more. It will be interesting to see which new areas engineers extend them into in the future.

Reach out to your Approved Networks representative and ask how our 200G InfiniBand AOC products can help you build InfiniBand networks for your supercomputing applications.