AECs: Interconnect Legacy 100G Ports to 400G Ports

Posted by Frank Yang on Jul 29, 2022

You may have heard of an acronym making its way around the data center and optical network environments: AEC.

Active Electrical Cables are one of the latest technologies for upgrading and streamlining data transfer in data centers big and small. But what are they? And do you need them?

AECs are like super-charged DACs (Direct Attach Cables) that let you do more with less, since AECs are smaller and easier to install. They are garnering interest because they allow data centers to upgrade their cabling without replacing equipment. And they offer solutions for special cases that have no other answer.

Here’s one example: Let’s say you have 100 Gigabit Ethernet (GbE) servers in your data center network, and each is equipped with a dual-port 100GbE QSFP28 Network Interface Card (NIC). You certainly could use 32-port 100GbE QSFP28 switches to connect these servers, since such switches are widely available on the market.

However, the port density of the 100GbE switches is limited to 32 ports of 100GbE per 1 Rack Unit (RU).

How can you achieve higher 100GbE port density in order to save rack space and reduce per-port cost? 400GbE switches are available. Can you deploy 400G to 4x 100G passive DAC breakouts to interconnect a 400G switch port to the 100G server NICs, like the familiar approach with 4x 10G breakouts? Unfortunately, the answer is “No”.

Why not?

To explain, there is some technical complexity we need to work through. Buckle in, this will sound . . . complicated.

The 400GbE switch port on the market today is constructed with 8 electrical lanes and operates at 50 Gbps nominal speed per electrical lane with 4-level Pulse Amplitude Modulation (PAM4). So far, so good – right?

An electrical lane is a circuit transmitting and receiving the signals. The aggregated data rate of 8 lanes is 400 Gbps. The 400G ports could be in QSFP-DD or OSFP form factor.

Still with me? Good.

Now, most 100G ports in Top of Rack (ToR) switches or server NICs, especially those already deployed in the field, are constructed with 4 electrical lanes and operate at 25 Gbps nominal speed per electrical lane with Non-Return to Zero (NRZ) modulation.

To make a 400G port interoperate with a 100G port, the speed and modulation must match on a per-electrical-lane basis. The electrical lane operating at the speed of 50 Gbps and PAM4 modulation at the 400G end is not aligned with the 25 Gbps and NRZ modulation at the 100G end.
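The mismatch can be written down as a small check. This is only an illustrative sketch: the `Port` class and the `lanes_match` helper are hypothetical names invented here, and the lane counts, speeds, and modulations are the nominal figures quoted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Port:
    """Hypothetical description of a port's electrical interface."""
    name: str
    lanes: int
    gbps_per_lane: int   # nominal speed per electrical lane
    modulation: str      # "PAM4" or "NRZ"

    @property
    def aggregate_gbps(self) -> int:
        # Aggregated data rate = number of lanes x nominal speed per lane
        return self.lanes * self.gbps_per_lane


def lanes_match(a: Port, b: Port) -> bool:
    """Two ports can be wired lane to lane only if both the per-lane
    speed and the modulation are the same on each side."""
    return a.gbps_per_lane == b.gbps_per_lane and a.modulation == b.modulation


PORT_400G = Port("400GbE QSFP-DD", lanes=8, gbps_per_lane=50, modulation="PAM4")
PORT_100G = Port("100GbE QSFP28", lanes=4, gbps_per_lane=25, modulation="NRZ")

print(PORT_400G.aggregate_gbps)           # 400
print(PORT_100G.aggregate_gbps)           # 100
print(lanes_match(PORT_400G, PORT_100G))  # False
```

The check fails on both counts: the per-lane speed differs and so does the modulation, which is why a passive cable cannot bridge the two ports.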

It’s like comparing a car lane with a bicycle lane. (My previous blog provides more details on this point.)

Therefore, we can’t simply use passive DAC breakouts or ordinary Active Optical Cable (AOC) breakouts, because neither resolves the speed and modulation mismatch.

So, what can we do to interconnect the legacy 100G ports to 400G ports?

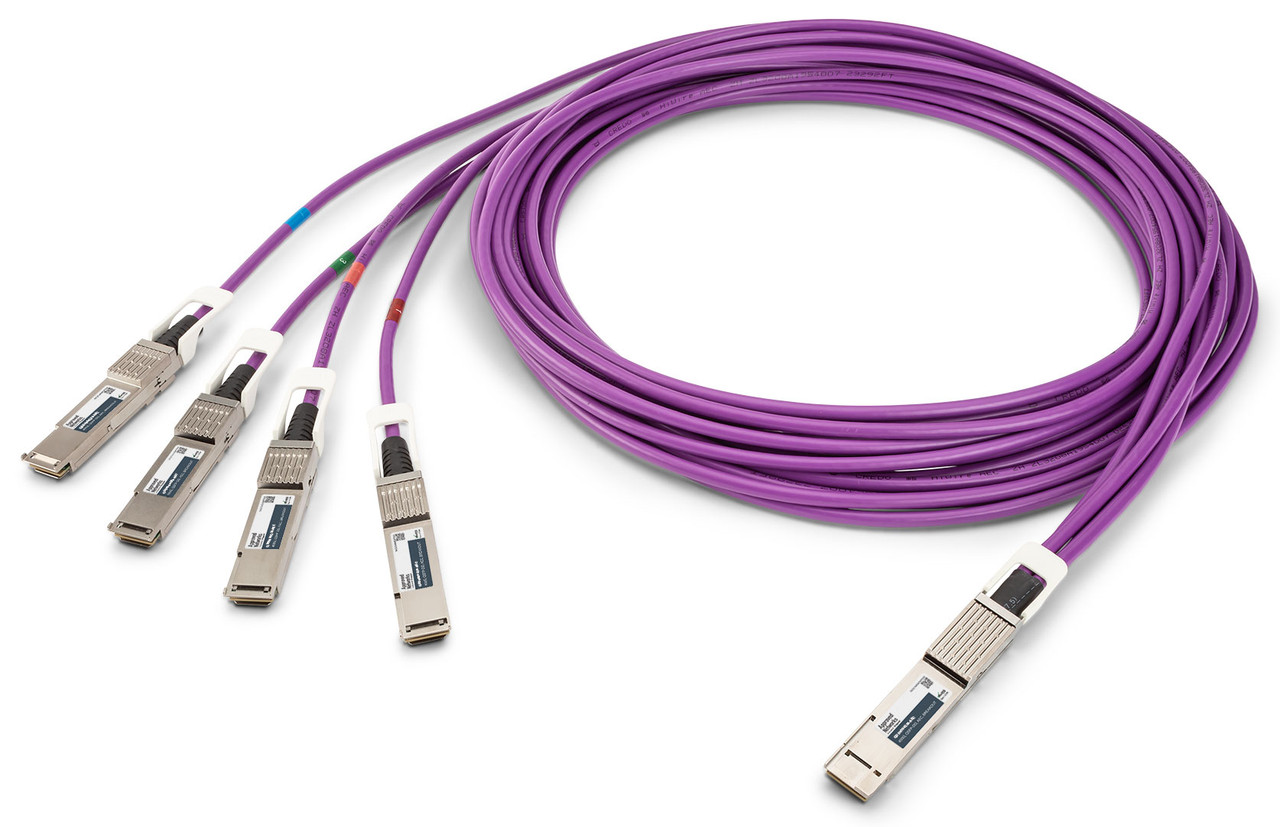

This is where Active Electrical Cables come in. AECs integrate Digital Signal Processing (DSP) chips in the copper cable assembly. The DSP chips convert between 50G PAM4 and 25G NRZ signaling, change the number of lanes, and translate Forward Error Correction (FEC) between the two ends.
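To make the lane arithmetic concrete, here is a rough sketch of how a 4x 100G breakout divides up a 400G port. The function name `breakout_plan` and the way lane numbers are assigned to each leg are assumptions for illustration only; the actual lane mapping depends on the cable and switch. The sketch models only the lane counts and rates, not the FEC translation.

```python
from __future__ import annotations


def breakout_plan(host_lanes: int = 8, host_gbps_per_lane: int = 50,
                  legs: int = 4, leg_gbps_per_lane: int = 25) -> list[dict]:
    """Split the host-side lanes evenly across the breakout legs and work
    out how many slower lanes each leg needs to carry the same data rate."""
    if host_lanes % legs != 0:
        raise ValueError("host lanes must divide evenly across legs")
    host_lanes_per_leg = host_lanes // legs
    leg_rate_gbps = host_lanes_per_leg * host_gbps_per_lane
    if leg_rate_gbps % leg_gbps_per_lane != 0:
        raise ValueError("leg rate must be a whole number of leg lanes")
    leg_lanes = leg_rate_gbps // leg_gbps_per_lane

    plan = []
    for i in range(legs):
        first = i * host_lanes_per_leg
        plan.append({
            "leg": i,
            # Assumed mapping: consecutive host lanes feed each leg
            "host_pam4_lanes": list(range(first, first + host_lanes_per_leg)),
            "leg_rate_gbps": leg_rate_gbps,
            "leg_nrz_lanes": leg_lanes,
        })
    return plan


for leg in breakout_plan():
    print(leg)
```

With the default figures, each leg takes two 50G PAM4 lanes on the 400G side and presents four 25G NRZ lanes on the 100G side, which is the conversion the DSP has to perform.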

For the 32-port 100GbE switch in 1 RU mentioned earlier, AEC 4x 100G breakouts enable data center operators to quadruple port density, up to 128 ports of 100GbE in 1 RU of space. This achieves high-density 100G by interconnecting 400G switch ports to 100G QSFP28 ports.
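The density math is simple enough to sanity-check in a few lines. This is a back-of-the-envelope sketch: `ports_per_ru` is a hypothetical helper, and the server count assumes a 32-port 400GbE switch in 1 RU with both ports of every dual-port NIC cabled to the same switch, which your design may not do.

```python
def ports_per_ru(switch_ports_per_ru: int, breakout_factor: int = 1) -> int:
    """100GbE ports reachable from 1 RU of switch, given how many
    100G legs each physical switch port breaks out into."""
    if switch_ports_per_ru <= 0 or breakout_factor <= 0:
        raise ValueError("port count and breakout factor must be positive")
    return switch_ports_per_ru * breakout_factor


native_100g = ports_per_ru(32)                  # 32-port 100GbE switch
aec_100g = ports_per_ru(32, breakout_factor=4)  # 32-port 400GbE switch, 4x 100G AEC

# Assumption: every server uses both ports of its dual-port NIC on this switch
NIC_PORTS_PER_SERVER = 2
servers_per_switch = aec_100g // NIC_PORTS_PER_SERVER

print(native_100g)         # 32
print(aec_100g)            # 128
print(servers_per_switch)  # 64
```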

AEC breakouts are available up to 5 meters long. This length will cover the distance range for intra cabinet/rack connections in data centers.

Besides 4x 100G breakouts, AECs are also available as straight 400G QSFP-DD to QSFP-DD cables. These cables are up to 7 meters long and are suitable for interconnecting 400GbE ports within a rack or across adjacent racks.
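If you are planning cable runs, the two reach limits can be turned into a quick feasibility check. This is a minimal sketch: the dictionary keys and the `aec_reaches` helper are made-up names, and the limits are simply the maximum lengths quoted above, not a specification for any particular product.

```python
# Maximum AEC lengths quoted in this post, in meters
MAX_REACH_M = {
    "breakout_4x100g": 5.0,  # 400G to 4x 100G breakout
    "straight_400g": 7.0,    # 400G QSFP-DD to QSFP-DD
}


def aec_reaches(cable_type: str, distance_m: float) -> bool:
    """Return True if an AEC of the given type can span the distance."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return distance_m <= MAX_REACH_M[cable_type]


print(aec_reaches("breakout_4x100g", 3.0))  # True
print(aec_reaches("breakout_4x100g", 6.0))  # False
print(aec_reaches("straight_400g", 6.0))    # True
```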

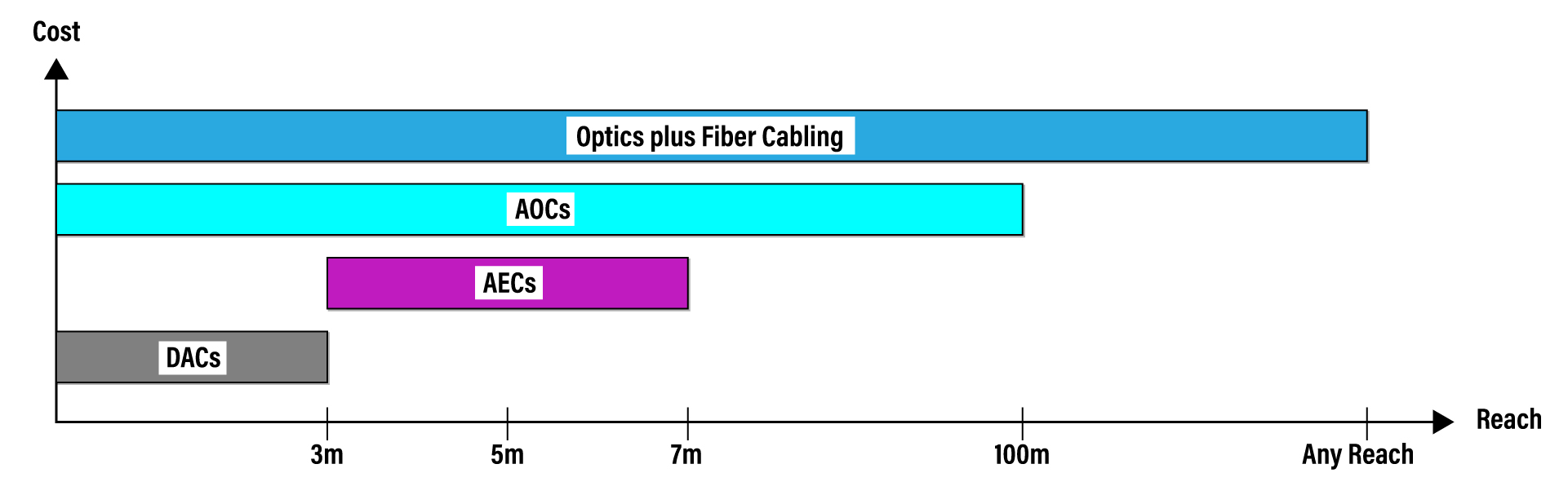

The figure compares QSFP-DD 400G straight AECs with other comparable 400G technologies in terms of relative cost and reach.

Per my calculations, 400G QSFP-DD AECs may consume 30% less power than 400G QSFP-DD AOCs of the same length. From a data center perspective, lower power consumption is always preferred.

AECs can help you achieve high 100G port density and beyond in your data center.