What is AI and why will it drive the need for more Transceivers, DACs, AOCs, and Fiber?

Posted by Kevin Kearney on Feb 26, 2024

It’s been just over a year since ChatGPT was made publicly available. According to a study by UBS Research, two months after its launch, the application reached over 100 million users, making it the fastest-growing consumer app in history. A year later, ChatGPT has an estimated 180.5 million users.

This Artificial Intelligence (AI) thing has captured the world’s fascination. Mine too! I have seen and heard terms like Large Language Models, Transformers, Generative AI, AI Inference, RDMA, RoCE, GPUs, and DPUs. Clearly, I had a lot to learn. So, this blog is the result of my effort to understand this technology better. I invite you to join me by reading further, and I hope you learn a little something along the way.

What is Artificial Intelligence?

What does AI really mean, anyway?

Oxford and Google define AI or Artificial Intelligence as: “the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.”

Come to think of it, we have been interacting with some form of AI for quite a while. Alexa and Siri recognize our voices and answer our questions. Many of us unlock our phones with facial recognition. Google Translate improves my communication with my Legrand colleagues in France.

So, why has AI garnered so much attention in recent years? What makes ChatGPT different?

What does the name ChatGPT even mean? I understand the “Chat” part; we have all been using chat applications for years. I had no idea what “GPT” meant. It turns out that this three-letter acronym, and what it represents, holds the key to understanding the fundamentals of AI.

Generative, Pre-Trained, Transformer

Generative AI is what makes this technology a game changer. AI can now creatively generate new content based on the questions or prompts we provide. All of us who have interacted with ChatGPT have seen the amazing content it can create and the potential time it can save.

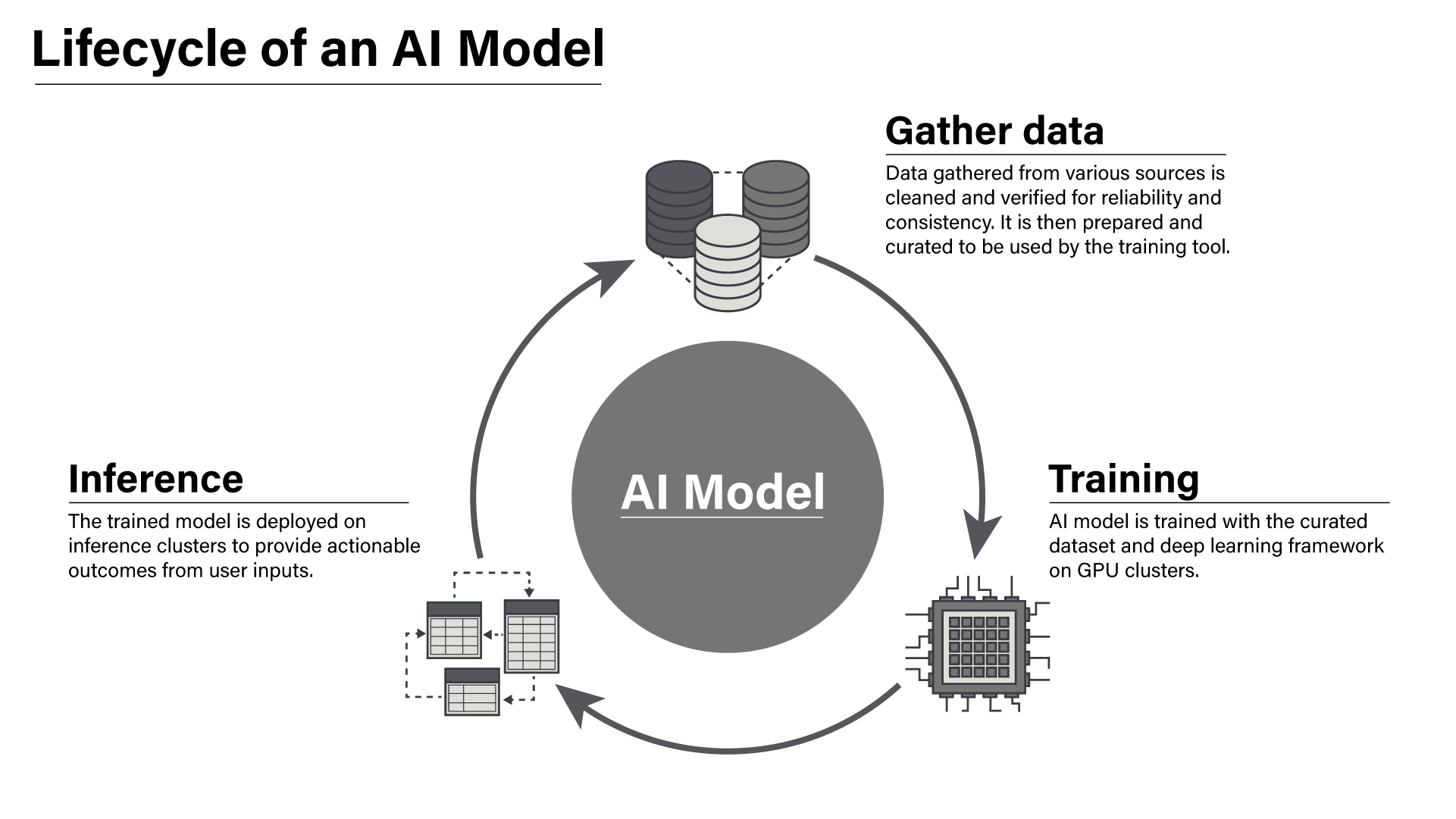

Pre-Trained Models are at the core of AI. This is where the real learning takes place. A Pre-Trained Model is designed to accomplish a specific task, and it learns much like we do. For example, if we wanted to learn a new language, we would have to read and recognize many words and determine what they mean. We would have to listen to others to hear how the words are pronounced. Finally, we would have to practice speaking a lot. With continued practice and feedback, we would improve until we could speak the language well. Pre-trained AI models go through the same process. It starts with a goal to accomplish, followed by gathering a massive amount of data related to that goal.
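To make the practice-and-feedback idea concrete, here is a minimal Python sketch under deliberately toy assumptions: the “model” is a single number `w` that predicts `y = w * x`, the (hypothetical) training data follows the rule y = 3x, and the feedback step is plain gradient descent on squared error. The names `train` and `infer` are illustrative only; real models have billions of parameters and are trained with far more elaborate methods.

```python
# Toy "pre-training": learn one parameter w so that w * x matches the examples.
# Illustrative only; not how any particular production model is trained.

def train(examples, epochs=200, learning_rate=0.01):
    """Fit w by gradient descent on mean squared error."""
    w = 0.0  # the single learned parameter, starting from a blank guess
    n = len(examples)
    for _ in range(epochs):
        # Feedback: how far off is each prediction, and in which direction?
        grad = sum(2 * (w * x - y) * x for x, y in examples) / n
        # Practice: nudge w a little in the direction that reduces the error.
        w -= learning_rate * grad
    return w

def infer(w, x):
    """Inference: use the learned parameter to make a new prediction."""
    return w * x

# Hypothetical training data generated by the rule y = 3x.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]
w = train(data)
print(round(w, 3))
print(round(infer(w, 10.0), 2))
```

Each pass compares predictions against known answers and shrinks the error a little, the same loop of practice and feedback described above, just at a microscopic scale.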

Transformers take that data and transform it into meaning and context. A Transformer is a type of neural network: a mathematical model that finds relationships in data and learns its context and meaning. During the training process, the Model encodes the knowledge it learns in learned variables known as parameters. The developers then deploy the trained Model to users like us, who make requests. Based on these requests, the Model generates content. Producing these responses is called the Inference Stage, because each response is an inference (a prediction based on the knowledge gathered in the training process).
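At the heart of a Transformer is an operation called attention: each token (roughly, a word) in the input is compared with every other token, and those comparisons decide how much each token contributes to the meaning of the others. The Python sketch below shows scaled dot-product attention on made-up two-number “embeddings”. It is a simplified illustration that assumes the inputs are used directly as queries, keys, and values; in a real Transformer those come from learned weight matrices, which are among the model’s parameters.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over plain lists of vectors.

    For each query: score every key by dot product, divide by sqrt(d),
    softmax the scores, and return the weighted average of the values.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(wt * v[j] for wt, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Hypothetical 2-dimensional embeddings for a three-token sentence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Self-attention: each token attends to every token, including itself.
result = attention(tokens, tokens, tokens)
for row in result:
    print([round(x, 3) for x in row])
```

Each output row is a blend of all the tokens, weighted toward the ones most similar to the token doing the “looking”, which is how context gets mixed into each token’s representation.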

AI requires unprecedented Compute, Networking, and Power!

Processing and training these large models require enormous amounts of computing power and high-speed networking. Nvidia, the leader in AI infrastructure, has been in the news a lot recently. If you are a gamer, you may know Nvidia as a supplier of awesome graphics cards.

Well, the GPUs (Graphics Processing Units) also work great for AI applications. GPUs use parallel processing and can do the mathematical calculations required for AI more efficiently than CPUs. Clusters of servers are all interconnected with switches and work in parallel to train these large models.
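Why does training need so much networking? One common approach, data parallelism, gives each GPU a slice of the training data. Each GPU computes its own gradient (its suggested correction to the parameters), and then all GPUs exchange and average those gradients so every copy of the model stays in sync. The sketch below simulates this in plain Python, assuming a one-parameter toy model and a hypothetical `all_reduce_mean` helper that stands in for the exchange a real cluster performs over its network on every training step.

```python
# Simulated data-parallel training with a one-parameter toy model y = w * x.

def local_gradient(w, shard):
    """Gradient of mean squared error computed on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Hypothetical stand-in for a network all-reduce: every worker gets the mean."""
    mean = sum(values) / len(values)
    return [mean] * len(values)

def data_parallel_step(w, shards, learning_rate=0.01):
    # Each worker computes a gradient on its own shard
    # (sequential here; in parallel on real hardware).
    grads = [local_gradient(w, shard) for shard in shards]
    # Exchange and average so every worker applies the identical update.
    synced = all_reduce_mean(grads)
    return w - learning_rate * synced[0]

# Hypothetical data for y = 3x, split into four equal-sized shards.
# With equal shard sizes, the averaged gradient equals the full-batch gradient.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = [data[i::4] for i in range(4)]

w = 0.0
for _ in range(100):
    w = data_parallel_step(w, shards)
print(round(w, 4))
```

The arithmetic per worker shrinks as more GPUs are added, but the gradient exchange does not go away, which is why the interconnect matters as much as the GPUs.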

Our Product Manager, Frank Yang, shared the Nvidia DGX SuperPOD reference design, based on the DGX A100 server, in his previous blog post, “Supporting AI with 200G InfiniBand AOCs for High-Performance Computing Networks.” Each DGX A100 server has 8 GPUs and requires eight 200G InfiniBand HDR connections.

Approved Networks has already launched the 200G AOC cables designed to work in that solution.
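For a rough sense of scale, here is a back-of-the-envelope Python sketch using only the figures above: eight 200G InfiniBand HDR connections per DGX A100 server. It assumes one AOC per server connection and ignores switch-to-switch links; the server count is a hypothetical input, not a figure from the reference design.

```python
# Server-side compute-fabric sizing for DGX A100 clusters.
# Assumption: one 200G HDR AOC per server connection; switch-to-switch links not counted.

HDR_LINK_GBPS = 200       # 200G InfiniBand HDR per connection
LINKS_PER_DGX_A100 = 8    # eight HDR connections per server

def a100_fabric(num_servers):
    """Return link count and bandwidth figures for num_servers DGX A100 servers."""
    links = num_servers * LINKS_PER_DGX_A100
    return {
        "server_links": links,  # also the assumed number of server-side AOCs
        "per_server_gbps": LINKS_PER_DGX_A100 * HDR_LINK_GBPS,
        "total_server_gbps": links * HDR_LINK_GBPS,
    }

# Example: a hypothetical cluster of 20 servers.
print(a100_fabric(20))
```

Even this small hypothetical cluster needs 160 server-side cables, and each server pushes up to 1.6 Tb/s into the fabric.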

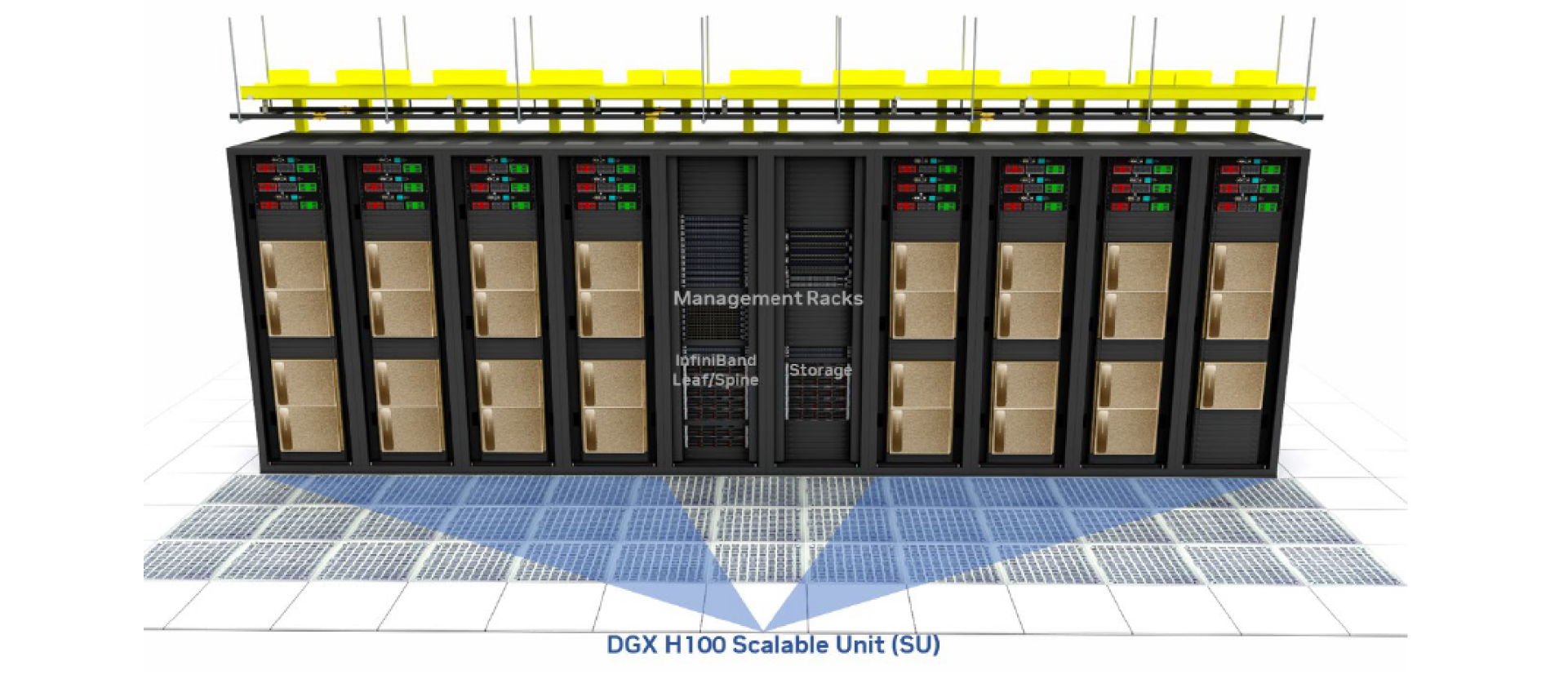

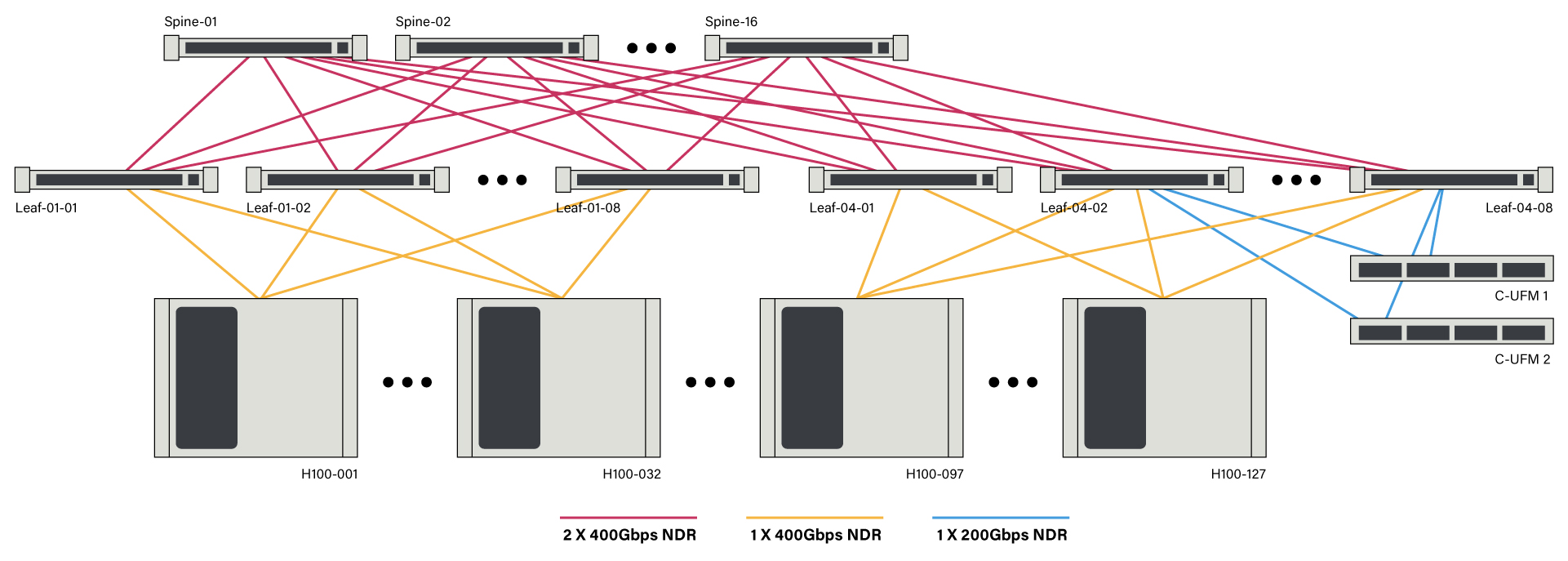

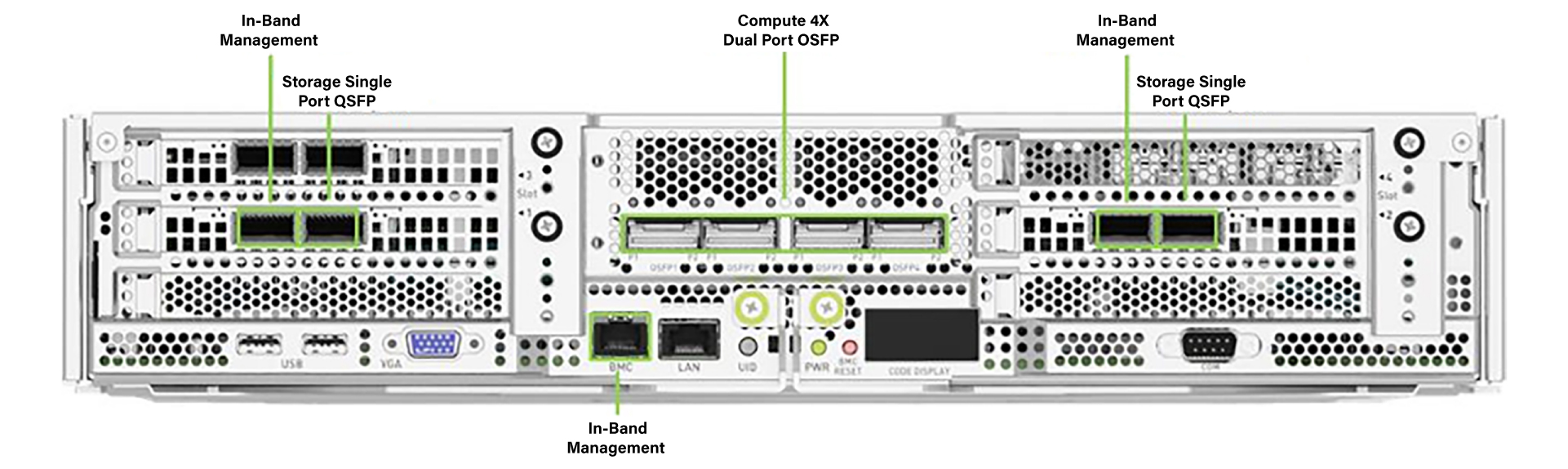

The latest Nvidia reference design features DGX H100 servers, each with 8 GPUs and four dual-port OSFP InfiniBand NDR interfaces.

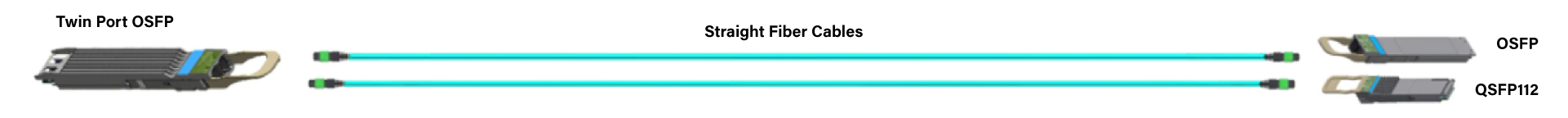

Each Scalable Unit (SU) of the Nvidia DGX SuperPOD consists of 32 DGX H100 servers with 8 GPUs per server. The servers are clustered close together and are all interconnected via 800G dual-port MPO, 2x400G OSFP SR8 multimode transceivers, and MTP cables.
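The server-side numbers for one Scalable Unit add up quickly. The Python sketch below uses the figures above (32 DGX H100 servers per SU, 8 GPUs per server, four dual-port OSFP NDR interfaces per server at 400G per port). It assumes one twin-port 800G (2x400G) transceiver per OSFP cage and one MTP cable per 400G port, and it does not count switch-side optics; treat it as an estimate, not a bill of materials.

```python
# Server-side sizing for DGX H100 Scalable Units (SUs).
# Assumptions: one twin-port 2x400G transceiver per OSFP cage,
# one MTP cable per 400G port, switch-side optics not counted.

SERVERS_PER_SU = 32
GPUS_PER_SERVER = 8
OSFP_CAGES_PER_SERVER = 4   # four dual-port OSFP NDR interfaces
PORTS_PER_OSFP = 2          # dual-port: 2 x 400G
NDR_PORT_GBPS = 400

def su_sizing(num_sus=1):
    """Estimate server-side transceivers, ports, and cables for num_sus SUs."""
    servers = num_sus * SERVERS_PER_SU
    ports = servers * OSFP_CAGES_PER_SERVER * PORTS_PER_OSFP
    return {
        "servers": servers,
        "gpus": servers * GPUS_PER_SERVER,
        "server_transceivers": servers * OSFP_CAGES_PER_SERVER,
        "ndr_ports": ports,
        "mtp_cables": ports,  # assumed: one cable per 400G port
        "per_server_gbps": OSFP_CAGES_PER_SERVER * PORTS_PER_OSFP * NDR_PORT_GBPS,
    }

print(su_sizing())
```

With these assumptions, a single SU works out to 256 GPUs, 128 server-side twin-port transceivers, and 256 400G ports (one per GPU), or 3.2 Tb/s per server.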

Approved Networks has the 400G and twin-port 800G transceivers on its roadmap and expects them to be available in Q2 2024. Of course, we can also supply the required MTP cables.

Ethernet or InfiniBand?

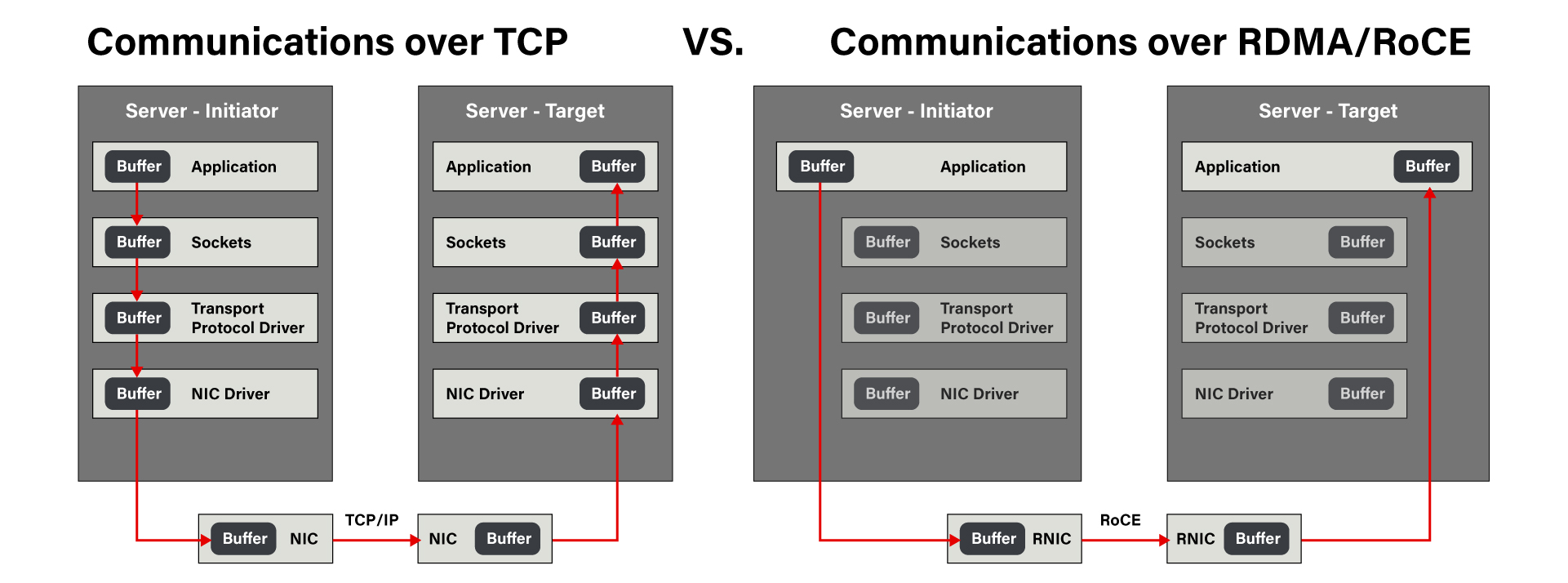

These AI training clusters require high-performance, low-latency connectivity. This is achieved by RDMA (Remote Direct Memory Access), which allows two GPUs on different nodes to exchange messages directly from memory, bypassing the server CPU and operating system.

For years, InfiniBand has had built-in RDMA. In 2014, the InfiniBand Trade Association (IBTA) introduced RoCE v2, the routable version of RDMA over Converged Ethernet (RoCE, pronounced “Rocky”). So now AI training networks can use either InfiniBand or Ethernet.

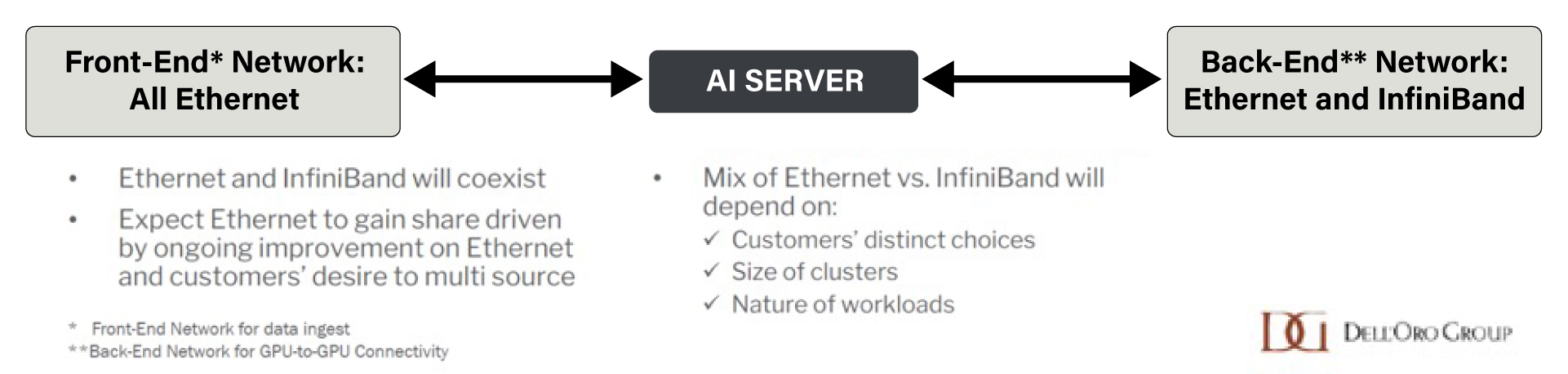

According to Dell’Oro Group, Ethernet and InfiniBand will coexist.

Whether you are implementing or upgrading an Ethernet or InfiniBand network, Approved Networks is here to help. We have a large selection of Nvidia/Mellanox-compatible solutions and will continue to add more. We look forward to hearing from you!